Sound in an immersive environment

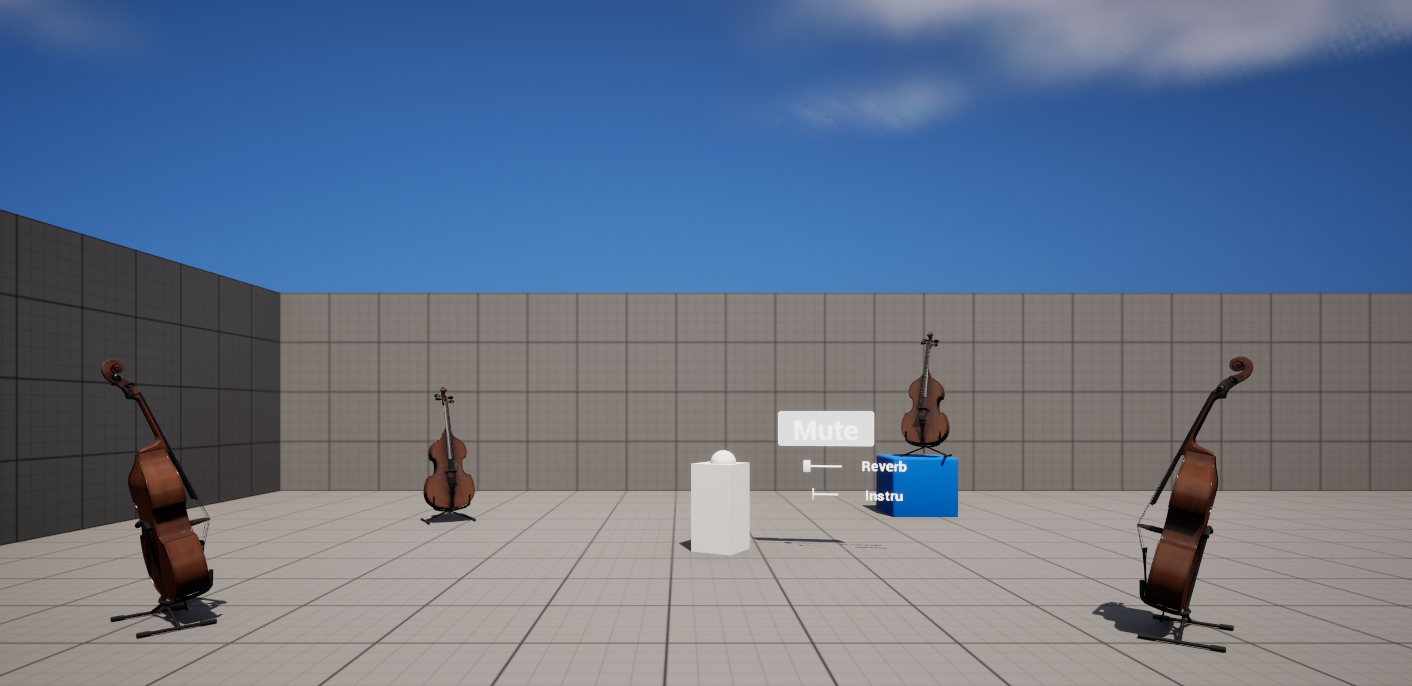

To illustrate sound properties such as room effects, localization and moving sounds, we had to study three important aspects of spatial audio: ambisonics, binaural sound and reverberation.

The NoiseMakers plugin is what allows us to integrate these properties into Unreal Engine.

Important sound properties

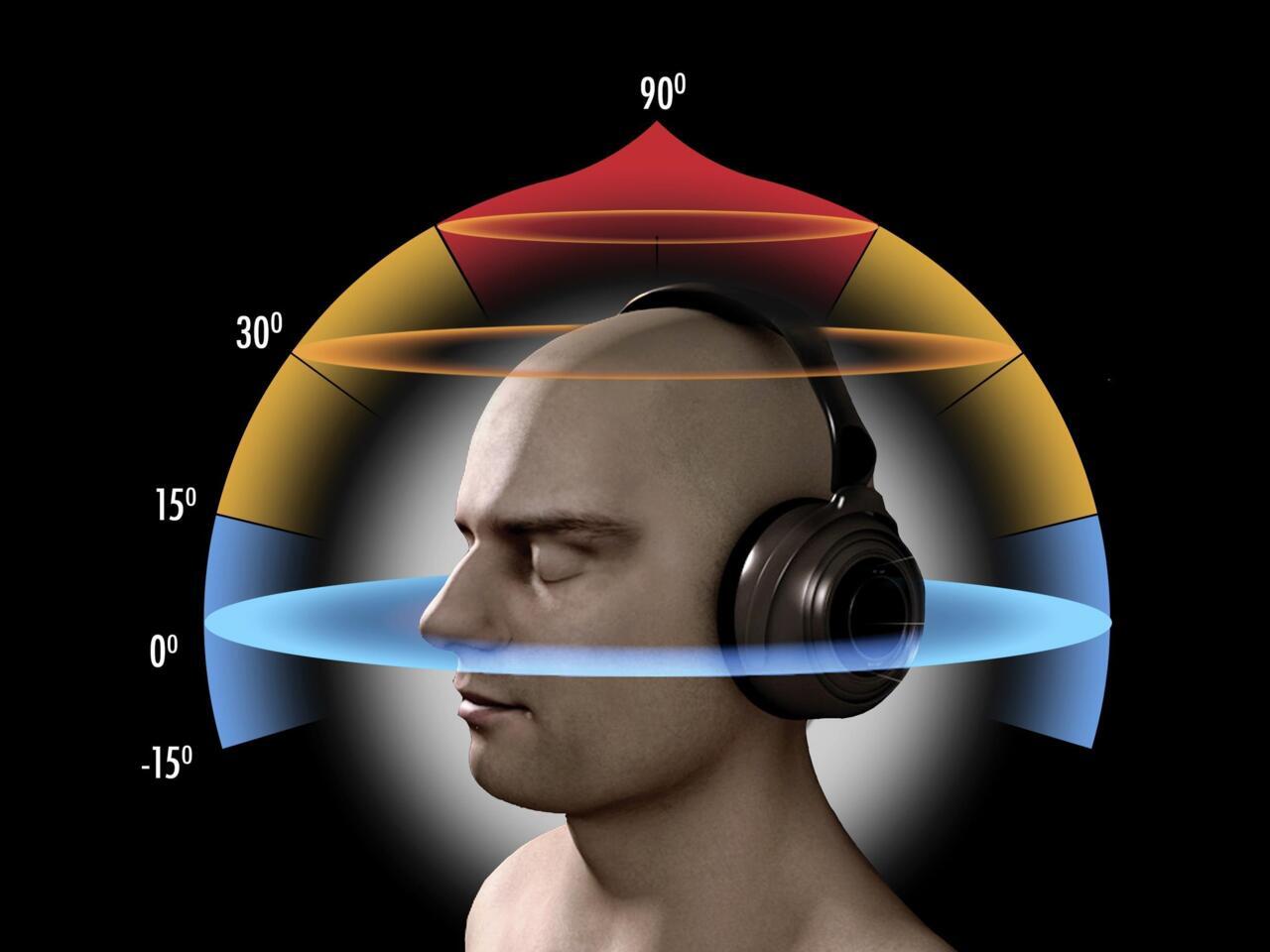

Binaural

Binaural sound is produced by applying filters that simulate the differences in arrival time, intensity and timbre between the two ears, which gives a more realistic perception of the direction a sound comes from.
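As a rough illustration of the time and intensity cues, the C++ sketch below turns a mono signal into a left/right pair. It assumes Woodworth's spherical-head formula for the interaural time difference, a typical head radius of 8.75 cm and a speed of sound of 343 m/s, and it replaces real filtering with a simple level reduction at the far ear. `woodworthItd` and `renderBinauralCues` are hypothetical names; this is not how NoiseMakers or Unreal Engine implement binaural rendering.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

// Interaural time difference (seconds) from Woodworth's spherical-head model:
// ITD = (a / c) * (theta + sin(theta)), valid for |theta| <= pi/2.
// azimuth: radians, 0 = straight ahead, positive = to the listener's right.
// Head radius a and speed of sound c are typical textbook values (assumptions).
double woodworthItd(double azimuth, double headRadius = 0.0875, double speedOfSound = 343.0) {
    const double theta = std::fabs(azimuth);
    assert(theta <= kPi / 2 + 1e-9);
    return headRadius / speedOfSound * (theta + std::sin(theta));
}

struct StereoBuffer {
    std::vector<float> left;
    std::vector<float> right;
};

// Crude binaural cues for a mono signal: the ear farther from the source
// hears the signal later (ITD) and quieter (a simple level difference).
// No HRTF filtering is done, so the timbre cue is not modelled.
StereoBuffer renderBinauralCues(const std::vector<float>& mono, double azimuth,
                                double sampleRate, float maxFarEarAttenuation = 0.5f) {
    const auto delay = static_cast<std::size_t>(std::lround(woodworthItd(azimuth) * sampleRate));
    // Level difference grows from none (source ahead) to maximum (source fully to one side).
    const float farGain =
        1.0f - maxFarEarAttenuation * static_cast<float>(std::fabs(std::sin(azimuth)));

    std::vector<float> nearEar(mono.size() + delay, 0.0f);
    std::vector<float> farEar(mono.size() + delay, 0.0f);
    for (std::size_t i = 0; i < mono.size(); ++i) {
        nearEar[i] = mono[i];
        farEar[i + delay] = farGain * mono[i];
    }
    // Positive azimuth: the source is on the right, so the right ear is the near ear.
    if (azimuth >= 0.0) {
        return {farEar, nearEar};
    }
    return {nearEar, farEar};
}
```

For a source directly to the right at 48 kHz, the left ear receives the sound about 31 samples (roughly 0.66 ms) later and at half the amplitude. A production binaural renderer would instead convolve the signal with measured head-related impulse responses, which also reproduces the timbre cue.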

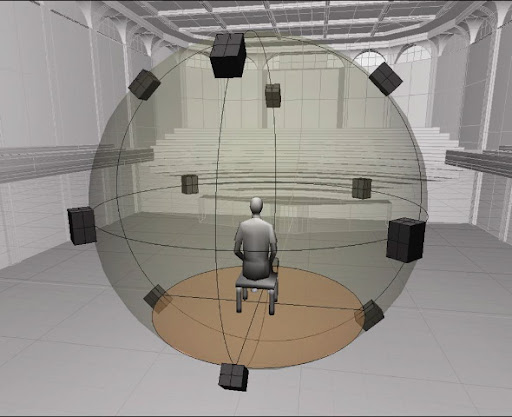

Ambisonic

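Ambisonics is a recording and playback technique that captures a full three-dimensional sound scene, allowing 360° immersion.

It encodes the direction from which sounds arrive into a set of channels that does not depend on the loudspeaker layout; the signal is decoded afterwards for the actual speakers or for headphones, which gives a more realistic spatialization.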
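To make the idea of an encoded sound scene concrete, here is a minimal first-order ambisonic encoder, assuming the AmbiX convention (ACN channel order, SN3D normalization). Each source contributes four channels weighted by its direction, and because encoding is linear, a whole scene is simply the sum of its encoded sources. `encodeFoa` and `encodeScene` are hypothetical names, and decoding is not shown.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One first-order ambisonic frame in the AmbiX convention:
// ACN channel order (W, Y, Z, X) with SN3D normalization.
using FoaFrame = std::array<double, 4>;

// Encodes one mono sample arriving from (azimuth, elevation), both in radians.
// Azimuth is counter-clockwise from straight ahead (positive = left) and
// elevation is positive upwards, following the usual ambisonic convention.
FoaFrame encodeFoa(double sample, double azimuth, double elevation) {
    const double cosEl = std::cos(elevation);
    return {
        sample,                              // W: omnidirectional component
        sample * std::sin(azimuth) * cosEl,  // Y: left/right
        sample * std::sin(elevation),        // Z: up/down
        sample * std::cos(azimuth) * cosEl,  // X: front/back
    };
}

struct PointSource {
    double sample;
    double azimuth;
    double elevation;
};

// Encoding is linear, so a whole sound scene is the sum of its encoded sources.
FoaFrame encodeScene(const std::vector<PointSource>& sources) {
    FoaFrame scene{0.0, 0.0, 0.0, 0.0};
    for (const PointSource& s : sources) {
        const FoaFrame f = encodeFoa(s.sample, s.azimuth, s.elevation);
        for (std::size_t ch = 0; ch < scene.size(); ++ch) {
            scene[ch] += f[ch];
        }
    }
    return scene;
}
```

A source straight ahead lands entirely in W and X, while one directly to the left lands in W and Y; a decoder later turns these four channels into loudspeaker or headphone feeds.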

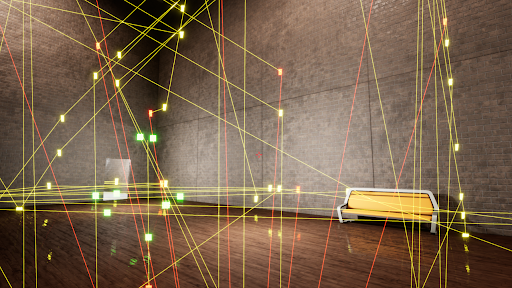

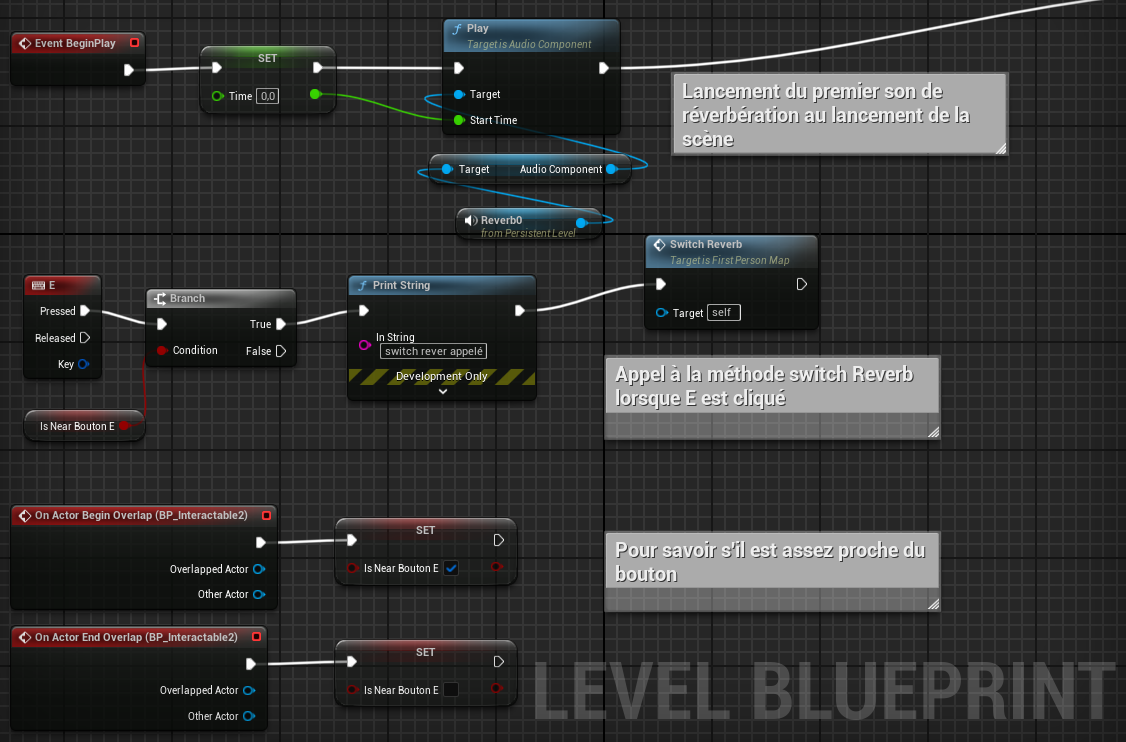

Reverberation

Reverberation is the persistence of a sound caused by its reflections off the surfaces of an environment: a succession of echoes that prolongs the original sound.

It lets the listener perceive the surrounding space and the distance of a sound source, and it plays a key role in the sense of immersion.
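The succession of decaying echoes can be sketched with a feedback comb filter, y[n] = x[n] + g * y[n - D], one of the building blocks of classic Schroeder-style artificial reverbs. The sketch assumes the feedback gain g is chosen so that the echoes fall by 60 dB after a target reverberation time RT60, which gives g = 10^(-3D / (RT60 * fs)). `feedbackComb` and `combGainForRt60` are hypothetical names; this is not the reverb used by Unreal Engine or NoiseMakers.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Feedback gain that makes the echoes decay by 60 dB after rt60 seconds:
// after rt60 * sampleRate / delaySamples round trips, g^k must equal 10^-3.
double combGainForRt60(std::size_t delaySamples, double sampleRate, double rt60) {
    return std::pow(10.0, -3.0 * static_cast<double>(delaySamples) / (rt60 * sampleRate));
}

// Feedback comb filter y[n] = x[n] + g * y[n - D].
// The output is extended by tailSamples so the echoes can ring out.
std::vector<double> feedbackComb(const std::vector<double>& input, std::size_t delaySamples,
                                 double gain, std::size_t tailSamples) {
    assert(delaySamples > 0 && std::fabs(gain) < 1.0);  // |g| < 1 keeps the filter stable
    std::vector<double> out(input.size() + tailSamples, 0.0);
    for (std::size_t n = 0; n < out.size(); ++n) {
        const double x = n < input.size() ? input[n] : 0.0;
        const double feedback = n >= delaySamples ? out[n - delaySamples] : 0.0;
        out[n] = x + gain * feedback;
    }
    return out;
}
```

Fed a single impulse, the filter outputs an echo every D samples, each one g times quieter than the previous. A single comb sounds metallic; Schroeder's design combines several combs with mutually prime delays in parallel, followed by all-pass filters, to obtain a denser tail.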

Unreal Engine

Unreal Engine is a state-of-the-art real-time 3D engine, known for its realistic graphics, used to develop video games, simulations, architectural visualizations and VR applications. It is proprietary software developed by Epic Games. The engine is free to use, but Epic Games takes a share of the revenue a product generates beyond a certain amount.


Our objective

Until now, Immersia has worked exclusively with the Unity engine. A large part of our project consists of deploying Unreal Engine in Immersia.

Unreal Engine for Immersia

The researchers at Immersia want to broaden what the platform can offer by deploying an additional engine.

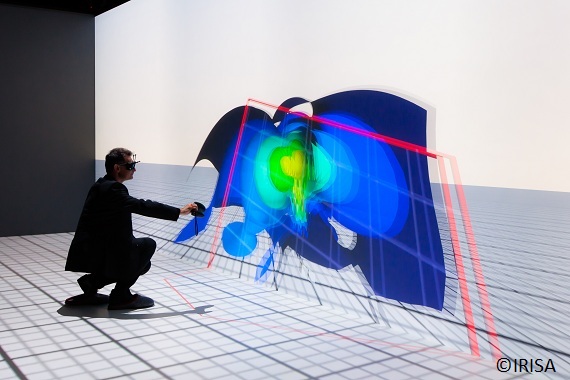

Immersia: how does it work?

The platform is an immersive system of 14 projectors spread over 4 screens, which together form a rectangular parallelepiped with a total projected surface of 76 m². The system also includes 7 computers, a spatialized sound system, stereoscopic glasses and a 360° motion-tracking system.

The challenge is to make all of Immersia's components work together, with Unreal Engine driving the platform.

How do we use Unreal Engine?

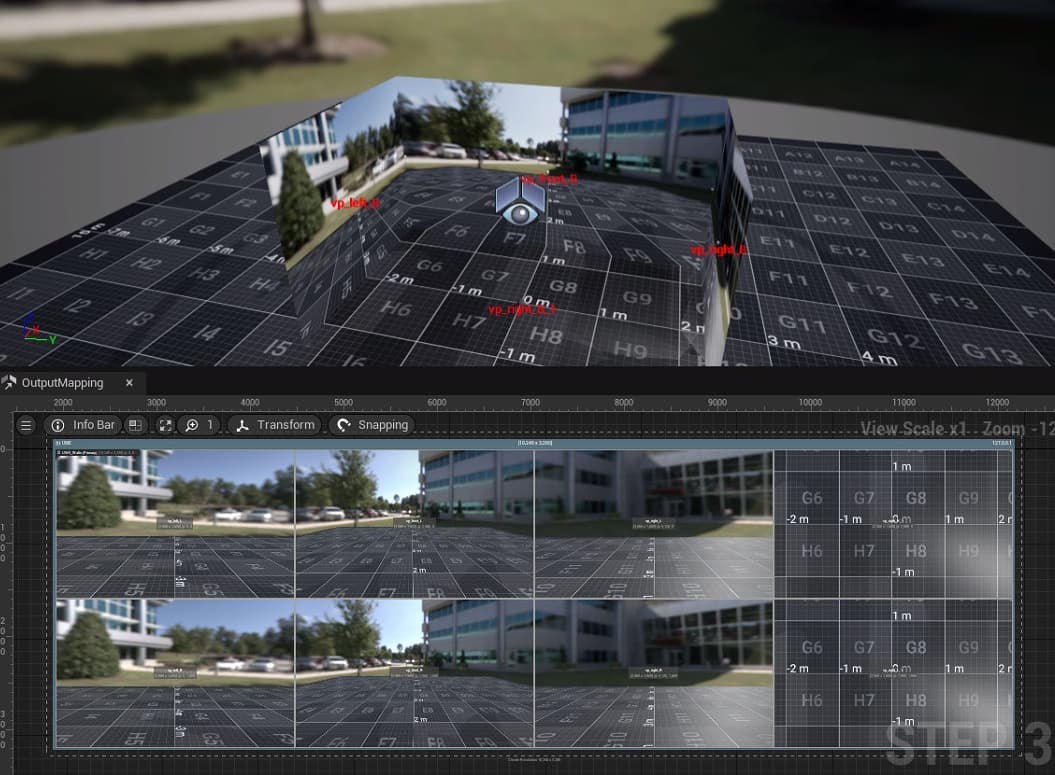

nDisplay

nDisplay is an Unreal Engine plugin that renders a single scene across several computers and displays. It is essential for running an Unreal Engine scene on a platform made of multiple machines, because each machine must render only its own part of the image rather than the whole frame. This raises two constraints: the displays must stay synchronized with one another, and each computer must know, in real time, which part of the image it is responsible for. nDisplay handles both, distributing the rendering of the scene across the machines.
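To illustrate how each computer can know which part of the image it renders, the sketch below splits a shared canvas into one vertical strip per cluster node. The layout is a simplified assumption: nDisplay reads the actual per-node viewports and projection settings from its cluster configuration, and it also keeps the nodes synchronized frame by frame, which this code does not model. `Viewport`, `splitIntoColumns` and `nodeForColumn` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of the rectangle of the shared canvas a node renders.
struct Viewport {
    int x;
    int y;
    int width;
    int height;
};

// Splits a width x height canvas into nodeCount vertical strips, one per node.
// When width is not divisible by nodeCount, the first nodes get one extra column.
std::vector<Viewport> splitIntoColumns(int width, int height, int nodeCount) {
    assert(nodeCount > 0 && width >= nodeCount);
    std::vector<Viewport> viewports;
    viewports.reserve(static_cast<std::size_t>(nodeCount));
    int x = 0;
    for (int i = 0; i < nodeCount; ++i) {
        const int w = width / nodeCount + (i < width % nodeCount ? 1 : 0);
        viewports.push_back(Viewport{x, 0, w, height});
        x += w;
    }
    return viewports;
}

// Returns the index of the node that renders pixel column px, or -1 if none does.
int nodeForColumn(const std::vector<Viewport>& viewports, int px) {
    for (std::size_t i = 0; i < viewports.size(); ++i) {
        const Viewport& v = viewports[i];
        if (px >= v.x && px < v.x + v.width) {
            return static_cast<int>(i);
        }
    }
    return -1;
}
```

With a 1000-pixel-wide canvas and three nodes, the strips are 334, 333 and 333 pixels wide, so every column of the image belongs to exactly one node.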

Scene

In Unreal Engine, a scene represents the virtual environment in which a project takes place. It is composed of a set of objects called Actors, which include elements such as 3D models, lights, cameras, and special effects. The scene is defined in a Level, which serves as the main container for organizing these objects and their interactions. Using the level editor, developers can arrange and customize the scene to create immersive and interactive worlds.
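The Level/Actor relationship can be pictured with a plain C++ analogy: a container that owns heterogeneous scene objects. This is not the Unreal API (in engine code, actors derive from `AActor` and are created with `UWorld::SpawnActor`); `Actor`, `MeshActor`, `LightActor` and `Level` below are hypothetical stand-ins.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Plain-C++ analogy, NOT the Unreal API: every object placed in the scene is an Actor.
struct Actor {
    std::string name;
    explicit Actor(std::string n) : name(std::move(n)) {}
    virtual ~Actor() = default;
    virtual std::string kind() const { return "Actor"; }
};

// Specialized actors, e.g. a 3D model and a light.
struct MeshActor : Actor {
    using Actor::Actor;
    std::string kind() const override { return "Mesh"; }
};

struct LightActor : Actor {
    using Actor::Actor;
    double intensity = 1.0;
    std::string kind() const override { return "Light"; }
};

// The Level is the container that owns and organizes the scene's actors.
class Level {
public:
    template <typename T>
    T& spawn(std::string name) {
        auto actor = std::make_unique<T>(std::move(name));
        T& ref = *actor;  // stays valid: the vector stores pointers, not actors
        actors_.push_back(std::move(actor));
        return ref;
    }

    std::size_t actorCount() const { return actors_.size(); }

    const Actor* find(const std::string& name) const {
        for (const auto& a : actors_) {
            if (a->name == name) {
                return a.get();
            }
        }
        return nullptr;
    }

private:
    std::vector<std::unique_ptr<Actor>> actors_;
};
```

Spawning a `MeshActor` named "Chair" and a `LightActor` named "Sun" leaves the level owning two actors that can be looked up by name, mirroring how the level editor lists everything placed in a scene.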

Blueprints

Blueprints are a visual scripting language for creating game mechanics and interactions without writing C++ code. They follow an object-oriented, event-driven programming model that provides all the usual algorithmic primitives (conditions, loops, ...). Blueprint code is made of nodes and the links between them. When an event in the scene triggers the first node of a graph, an execution signal travels along the links and activates each node it reaches. Under the hood, Blueprint nodes call underlying C++ functions and classes, but a Blueprint graph is not itself C++ code: when it is compiled, it is translated into bytecode that runs on Unreal Engine's Blueprint virtual machine. (Unreal Engine 4 also offered an optional "nativization" step that converted Blueprints into verbose, hard-to-read generated C++ at packaging time; it was removed in Unreal Engine 5.)
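The execution model described above (an event fires the first node, then a signal follows the links) can be mimicked with a tiny node graph in plain C++. This is an illustrative assumption only: real Blueprints are compiled to bytecode for the Blueprint virtual machine and also have data pins, which are not modelled here. `Node` and `fireEvent` are hypothetical names.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical mini visual-script node: it runs an action, then returns the
// index of the node its outgoing execution link leads to (-1 = stop).
struct Node {
    std::string name;
    std::function<int()> run;
};

// Follows the execution links from the triggered entry node and records the
// name of every node the signal activates. maxSteps guards against endless loops.
std::vector<std::string> fireEvent(const std::vector<Node>& graph, int entry, int maxSteps = 1000) {
    std::vector<std::string> trace;
    int current = entry;
    while (current >= 0 && maxSteps-- > 0) {
        assert(current < static_cast<int>(graph.size()));
        trace.push_back(graph[current].name);
        current = graph[current].run();
    }
    return trace;
}
```

A graph such as OnPlayerEnter, then Branch, then PlaySound behaves like an event handler containing an if statement: the Branch node's action decides which outgoing link the signal follows, so changing a condition changes which nodes are activated.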